As the year comes to a close, it’s time to consider the significance of new beginnings, such as the first general purpose computer, the first demonstration of hypertext or the year Time Magazine made the personal computer the first non-human winner of its “Man of the Year” award. Let’s explore these and other critical moments in tech history:

December 9, 1968 – The “Mother of All Demos”

On this day Douglas Engelbart gave a 90-minute presentation at the 1968 Fall Joint Computer Conference in San Francisco that in the decades since has come to be known as the “mother of all demos.” His team at the Stanford Research Institute had spent six years creating its oN-Line System (NLS), which was intended to show how computers could augment human abilities and intelligence.

Before the presentation, some called Engelbart a “crank.” Then he proceeded to demonstrate the building blocks of modern computing: the mouse, hyperlinks, video conferencing, collaborative live editing of documents and more. All of these feel familiar to us today, but seeing these concepts in action within a single system in 1968 shook the computing community. Engelbart was said to be “dealing lightning with both hands.”

Change was not immediate – it took decades for his system design principles to find their way into commercial products. The Apple Macintosh and Microsoft Windows built on his graphical user interface ideas in the 1980s, hyperlinks tied the web together in the 1990s, and real-time document collaboration has only recently begun to change workplaces. Engelbart, who died in 2013, lived to see his storied demo become the reality of modern work.

December 22, 1882 – The First Electric Christmas Lights

Christmas trees used to be lit with wax candles, but in 1882, Edward Hibberd Johnson decided to string his tree with electric lights. He worked for Thomas Edison’s Illumination Company and would later become its vice president. Johnson wired 80 red, white and blue bulbs by hand along a single cord. The spectacle drew passersby to Johnson’s window, as well as press attention, but several obstacles kept Christmas lights from becoming common for decades.

For one, people still distrusted electric light, which was considered a fire hazard. The lights were also expensive – a set of 12 cost about $350 in 1900. And even if you could afford them, you also needed to hire an electrician to set them up. By the 1930s, these concerns had been addressed, and Christmas lights went mainstream. Today, they’re still a way to mark technological advancement: incandescent bulbs have been largely replaced with LEDs driven by electronic circuits, and the lights can be controlled with a smart home system. Sadly, a technology to keep the strings untangled remains the stuff of dreams.

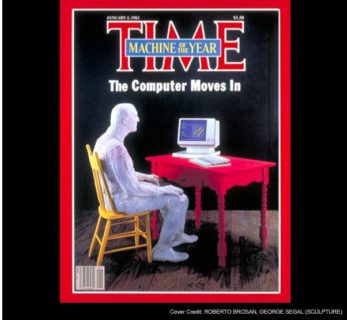

December 26, 1982 – Time Magazine Names the Personal Computer “Machine of the Year”

For the first time since it began its “Man of the Year” award in 1927, Time Magazine honoured a non-human in 1982: the personal computer. The cover story surveyed the many facets of computing, including how it would change the world of work. A poll conducted for the story found that 73 percent of respondents “believed the computer revolution would enable more people to work at home.” The story also anticipated “computerized electronic message systems that could eventually make paper obsolete, and wall-sized, two-way TV teleconference screens that will obviate traveling to meetings.”

Staff writer Otto Friedrich noted that this change in the workplace was in its infancy: just 10 percent of typewriters in the 500 largest companies had been replaced. Friedrich himself wrote the story on a Royal 440 typewriter, and continued to use it with special permission from Time long after the magazine had shifted its workflow to computers.

December 26, 1791 – Charles Babbage is Born

Charles Babbage is best known for designing mechanical computers. His Difference Engine calculated mathematical tables, while his Analytical Engine was a general purpose device that included the elements of modern computers. It was instruction-based and intended to be programmed with punch cards, directly inspired by the loom-programming cards created by Joseph-Marie Jacquard (whom we met in July).

Babbage had difficulty funding the construction of his engines, and completed neither in his lifetime. In 1991, the London Science Museum completed a machine built from his designs for Difference Engine No. 2. It has 4,000 parts, weighs over three metric tons – and it works. A separate project is underway to build the Analytical Engine, with an eye to completing it by 2021. It will be the size of a small steam train and run at a clock speed of 7Hz. For comparison’s sake, Sinclair’s ZX81, released in 1981, had a clock speed of 3.2MHz.

December 29, 1952 – The First Transistor-based Hearing Aid Goes on Sale

The Sonotone 1010 was the first hearing aid to use a transistor and, interestingly, the first commercial product of any kind to do so. It weighed 88 grams and cost $229.50 at the time, about $3,000 today. Transistors allowed for less distortion, longer battery life and a smaller size than the vacuum tube hearing aids that had been in use since the 1920s. For these reasons, transistor hearing aids quickly replaced the older technology.

Today, some hearing aids can connect to iPhones and stream phone calls, music and podcasts. The lines between assistive, consumer and business technology are beginning to blur: Apple AirPods, for example, can also be used to join business calls hands-free. The Live Listen feature uses the microphone in an iPhone or iPad to stream sound to AirPods or compatible hearing aids, making it easier to hear in noisy environments. This convergence brings mainstream convenience to the hearing-impaired community, and advances in hearing technology and digital signal processing to everyone.